to question a question

I write this paragraph as I contemplate the essay I just wrote. This piece is a journey. I could summarize it in an abstract or something like that, but I think that would be a disservice to it. While I worked on this essay, my mind jumped from idea to idea, so it does not follow a linear trajectory. Rather, its form mirrors its content. I could polish it, but that would change its form, something I don’t want to do. I am also tired. However, the premise of questioning assumptions lives through the whole thing. Enjoy!

Before we get into it, though, let me make a quick note. I make certain references to niche topics in philosophy, math, and physics. I will provide links to references, but I also recommend using an AI platform to generate explanations. Just paste a paragraph of my writing into ChatGPT or DeepSeek and ask it to define the terms. After all, some of the paragraphs you paste in may have been written by Chat itself!

A poetic axiom

Words, Questions, A pencil
A puzzle to take apart but not put together
Answer me this
What did you eat for breakfast today?
A simple question but what if I already knew
A set contains many things but little information
A power set though is like a power punch
Now introduce infinity and I am lost
Like Tyson after Cus died
A question is not a question
Do not be fooled

Let us now step into the labyrinth of questions, where each turn reveals a new layer of recursion. But before we do, here is a quote from DeepSeek after I shared my essay with her.

A question is a seed, planted in the soil of uncertainty. Its roots dig deep, branching into infinite possibilities, until it blooms into an answer—only to scatter new seeds upon the wind.

Introduction: The Illusion of the Question

What is a question? If we look for an answer, we might begin with the dictionary: a sentence worded or expressed so as to elicit information. But this leads to another question—what is a definition? A definition is an attempt to hold meaning in place; to capture something fluid in something static. Language is not static though. Meaning is not static either. And so, a question is not merely a request for information; it is something far more complex.

Instead of defining what a question is, let’s examine how questions function. One person asks, another answers. The answer does not come before the question though. This tells us something crucial: the question frames the answer, not the other way around. This may seem trivial, but it is anything but. Consider the following examples.

“Did you eat cereal for breakfast?” has two answers.

“What did you eat for breakfast?” has many more answers but it still feels like a fairly small set.

“Was your breakfast ethical?” seems to have vastly more possible answers and lines of discussion.

The very form of a question restricts the space of possible answers. A question is not neutral—it selects, it omits, it frames. Are questions truly just inquiries, or are they pre-answers, shaping the landscape of thought before we even begin to respond?
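For the math people out there, here is one way to put a number on how much a question's form restricts its answers, using information theory (which I come back to later). This is my own sketch, not a standard model of questions: it assumes each question has a fixed list of possible answers (the lists below are made up for illustration) and, unless stated otherwise, that every answer is equally likely. Under that assumption, a question with n possible answers can resolve at most log2(n) bits of uncertainty.

```python
import math


def entropy_bits(probs):
    """Shannon entropy, in bits, of a probability distribution over answers."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def uniform(n):
    """Assumption: each of the n possible answers is equally likely."""
    return [1.0 / n] * n


# Hypothetical answer lists, made up for illustration (not data).
answer_spaces = {
    "Did you eat cereal for breakfast?": ["yes", "no"],
    "What did you eat for breakfast?": [
        "cereal", "eggs", "toast", "fruit",
        "yogurt", "pancakes", "oatmeal", "nothing",
    ],
}

for question, answers in answer_spaces.items():
    bits = entropy_bits(uniform(len(answers)))
    print(f"{question} {len(answers)} answers, at most {bits:.1f} bits")

# Assumption: a leading yes/no question skews the odds, say 90/10,
# which lowers the information its answer can carry below 1 bit.
print(f"skewed yes/no question: {entropy_bits([0.9, 0.1]):.3f} bits")
```

With these made-up lists, the yes/no question tops out at 1 bit and the eight-answer version at 3 bits. “Was your breakfast ethical?” does not fit this sketch at all, since there is no finite list of answers to write down.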

If a question determines the shape of its answer, then we must ask: do we control our questions, or do they control us? In exploring this question, we may find that questioning a question is often more revealing than answering it.

Some examples

Before diving in headfirst, we will highlight a few examples of how questions shape their answers in high-stakes situations.

Police questioning: In 1980, Steve Titus was wrongfully convicted of rape, largely due to the victim’s misidentification. Initially, the victim stated Titus resembled the attacker; however, after repeated questioning and reinforcement by authorities, her confidence solidified, leading to his conviction.

Suggestive questioning: Research has demonstrated that suggestive questioning can lead individuals to develop false memories. For instance, in some cases, children have reported events that never occurred after repeated and suggestive interviews by authority figures.

Voir Dire: When prospective jurors are questioned during jury selection (voir dire), the structure of the questions matters. Open-ended questions encourage jurors to express their thoughts more freely, providing deeper insights into their beliefs and potential biases. In contrast, leading questions can guide jurors toward specific responses, limiting the discovery of genuine biases.

Let us examine these findings. The first thing to note is that the first two examples stand in stark contrast to the last. I would go as far as to say that they are opposites, like positive and negative numbers (or, for the nerds out there, like the real and imaginary numbers).

The first two examples have the exact same form: when people ask very targeted questions, they get targeted answers. If we think about a question as having a certain number of possible answers, like our breakfast questions, then we should be able to estimate how many possible answers there are. In the first two examples, people ask questions with limited answers. The police, for example, may have asked, “Did Steve Titus rape you?” instead of “Who raped you?” There are only two answers to the first question but many more to the second.

Let’s construct a hypothetical scenario. Say someone is drunk at a party and is harassed or worse. When asked something like “Who is the culprit?” they may not remember any faces and so could not say. In this case, they may answer, “I don’t know.” Then the search space is, in a sense, uncountable, since there is no one to count. However, if the police hold up a mugshot and ask “Is it this person?” the person can only answer in two ways; well, actually three, but people interpret it as two. The three possible answers are these:

Yes

No

I don’t know

Now, “yes” means “yes,” “no” means “no,” but “I don’t no” means “no.” See what I did there? (I think I will use this trick in a poem.) This should be slightly puzzling. If someone says “I don’t know,” why is it interpreted as “no” in this scenario? The thought that comes to my mind is quantum superposition: when a measurement is made, no matter what it is, the wavefunction must collapse. Here we have an analogue: when a question is asked, there must be an answer. Even though “I don’t know” means I don’t have an answer, the statement is itself a paradox. By saying any words at all, a person answers the question. This idea sheds light on the crucial ideas of ground/figure, form/content, and syntax/semantics. For a better understanding of these ideas, I recommend you ask Chat to summarize the book Gödel, Escher, Bach, otherwise known as GEB. This book is an amazing read, but it is long! To be honest, I have only read half of it. But for the real nerds out there, you should definitely give it a read.

Anyway, the ground/form/syntax can be thought of as a placeholder, like x, for an answer; the content of the answer does not matter. In the realm of form, an answer is binary: it either is an answer or it isn’t one. By saying any words, a person does answer the question, and by saying no words, a person does not answer the question.

All of this to say, there are only two answers to the question “Is it this person?” This means that the police have defined a countable (finite) space, as opposed to the uncountable space from before. Now, for the math people out there, which space seems smaller? Intuitively, the one from the first scenario (the uncountable one), right? That, in and of itself, should set off alarm bells for you. (For the non-math people out there, an uncountable set is always strictly larger than any countable set. Weird, right?) On the other hand, an uncountable space could be thought of as infinitely large because it sort of contains every possibility. We will discuss later why I believe that infinity is equal to 0 (everything = nothing).
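The fact that an uncountable set is strictly larger than a countable one goes back to Cantor's diagonal argument, which also shows that the power set of the natural numbers is uncountable. The toy sketch below only illustrates the mechanism on a finite list, so it proves nothing about infinity by itself: given any list of n binary sequences, flipping the i-th digit of the i-th sequence builds a new sequence that differs from every sequence on the list. Cantor's argument applies the same trick to any infinite list. The helper name `diagonal_escape` is hypothetical.

```python
def diagonal_escape(rows):
    """Finite toy version of Cantor's diagonal trick (hypothetical helper).

    rows: a list of n binary sequences, each at least n digits long.
    Returns a sequence whose i-th digit is the flip of rows[i][i], so it
    differs from every listed sequence in at least one position."""
    return [1 - rows[i][i] for i in range(len(rows))]


listed = [
    [0, 0, 0, 0],
    [1, 1, 1, 1],
    [0, 1, 0, 1],
    [1, 0, 0, 1],
]

new_row = diagonal_escape(listed)
print(new_row)            # [1, 0, 1, 0]
print(new_row in listed)  # False
```

However long the list, the diagonal always escapes it, which is why no list can exhaust all the sequences.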

The person answering the police has now answered the question, whereas when they were asked “Who is the culprit?”, the answer “I don’t know” was not an answer. How can this be? This is where pragmatics (the study of how context shapes meaning) comes in. However, I believe that pragmatics should be reframed as the shifting of what counts as an acceptable response (which can further be thought of as ground that is in flux). In the case of “Who is the culprit?” the space of answers is not well defined, as opposed to the question “Is it this person?”, where the space is well defined. In the first case, a lack of information (saying “I don’t know”) does not count as an answer, but in the second case, any words at all, even ones expressing a lack of information, count as an answer.
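Here is a toy model of that reframing, written as code. It is a hypothetical sketch of the rule described above, not an established model from linguistics: for a closed yes/no question, any response counts as an answer and “I don’t know” is read as “no,” while for an open question, “I don’t know” does not count as an answer at all (returned as `None`). The function name `interpret` is my own.

```python
def interpret(response, closed_question):
    """Hypothetical toy model of what 'counts' as an answer.

    closed_question=True  -> a yes/no question like "Is it this person?"
    closed_question=False -> an open question like "Who is the culprit?"
    """
    # Normalize case, whitespace, trailing punctuation, and curly apostrophes.
    normalized = response.strip().lower().rstrip(".!").replace("\u2019", "'")
    if closed_question:
        # Any words at all count as an answer; everything except "yes"
        # (including "I don't know") is read as "no".
        return "yes" if normalized == "yes" else "no"
    # For an open question, "I don't know" carries no answer at all.
    if normalized == "i don't know":
        return None
    return response.strip()


for reply in ["Yes", "No", "I don't know"]:
    print("Is it this person?", repr(reply), "->", repr(interpret(reply, True)))

unknown = "I don't know"
print("Who is the culprit?", repr(unknown), "->", repr(interpret(unknown, False)))
```

The same three words land in different places depending only on the question's answer space, which is the ground shifting underneath them.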

A note on neuroscience

Now, before moving on, I want to note that there are many neuroscience results which describe the same phenomenon. (I bring up this point not as evidence, but as a separate idea that you may be interested in, and which I will write about in the future.) In the words of Chat:

When you ask a leading question, you activate specific neural circuits associated with related concepts in the brain.

The hippocampus, which is involved in memory retrieval and reconstruction, tends to fill in gaps using contextual cues.

The brain, especially the prefrontal cortex, optimizes cognitive effort by taking shortcuts. If a question implies a preferred answer, the brain is less likely to explore alternative options due to efficiency bias.

The way language is structured affects how information is encoded in the language centers of the brain (e.g., Broca’s and Wernicke’s areas).

Now, we know from empirical evidence that the phenomenon described above happens. Did neuroscience predict it? Not really; rather, neuroscience has tried to explain why this effect happens. Much of science does this; it sometimes feels to me, though, like trying to screw in a nail: we are using the wrong tool. Maybe an explanation in terms of biology is not needed. What would replace it? Information theory, specifically probabilistic information theory. I will make an in-depth post later trying to relate information theory, quantum mechanics, and fractal geometry as a replacement for the current scientific method. In my model, biology is not just biology but a probabilistic, emergent superposition. In this way, from our vantage point (our reference point), there is no absolute truth of the kind that science fundamentally tries to uncover. Just as in quantum mechanics, when we measure the system, it gives us an answer which we say is probabilistically determined. However, many sciences presuppose a deterministic reality. Even when they use probabilistic tools, the evidence suggests that they still assume a deterministic world. Here are some words from Chat.

Determinism

In classical science (Newtonian mechanics), determinism was dominant—the idea that given initial conditions and natural laws, future events are completely predictable.

However, with the rise of quantum mechanics, strict determinism was challenged (e.g., Heisenberg’s uncertainty principle), leading to probabilistic interpretations.

Realism vs. Anti-Realism

Scientific Realism. The belief that scientific theories describe an objective reality independent of human perception.

Anti-Realism. The view that scientific theories are just useful models or instruments for prediction, but we cannot claim they reflect an objective reality.

In practice, most scientists operate as realists—they generally assume their models reflect real structures in the world. However, in philosophy of science, there is ongoing debate, with some leaning toward anti-realism, especially in fields like quantum mechanics, where interpretations remain unresolved. Overall, realism is the dominant position in most scientific disciplines, though certain fields embrace more instrumentalist or anti-realist perspectives.

So we see that realism is the dominant perspective. The reason for this is that it has led to all of our scientific discoveries so far. A rule I live by, though, is this.

What works is true but Truth is not what works

So far, there have been no tractable theories about how the world could be anything but deterministic (besides quantum mechanics, but that is on the micro-scale). However, machine learning has been remarkably successful in neuroscience, and, after all, machine learning is just probabilistic prediction. I am proposing that a quantum-mechanics-inspired information theory built around fractal geometry could function as a new sort of science, especially for complex emergent systems like the mind.

Socrates: the questioner of questions

For those of you who do not know who Socrates was, he was a man in ancient Athens who asked questions. To call him a philosopher would be a disservice to him, in my opinion, as philosophy intends to answer questions, whereas, in my mind, that was never Socrates’ goal. People seem to believe that Socrates strove to know the truths of the world; however, let’s listen to his words.

I am wiser than this man, for neither of us knows anything great and good, but he thinks he knows something when he does not, whereas I, not knowing anything, do not think I do.

Does this mean Socrates did not think there were answers? I cannot say for certain, but I do know that words constrain me here; why do I need to choose between two options (answers or no answers) instead of embracing the nuance of Socrates’ situation? It would be a disservice to Socrates to try to explain his motives in something as fickle as language. But we can still examine the evidence.

In Plato’s Apology, which depicts Socrates’ trial, Socrates recounts that the Oracle at Delphi told his friend Chaerephon that no one was wiser than Socrates. When I first learned this, I believed that Socrates must be wise. But what if, instead, the Oracle was implying that wisdom itself is a paradox and that there is no absolute knowledge? Here, wisdom is an illusion. I believe that Socrates may have come to this conclusion and that wisdom, to him, meant acknowledging this illusion. But let us explore the evidence.

The evidence seems to tell us that Socrates may have come to this conclusion through his inquiries, but it does not seem that he held this idea from the outset. If he did, why would he have questioned anyone? To expose their contradictions? Maybe; but he never said outright that knowledge does not exist. What exactly does Socrates say about knowledge? In Plato’s Meno, Socrates suggests that knowledge is not simply something we acquire, but rather something we recollect.

We do not learn, and that what we call learning is only a process of recollection.

In Meno as well, Socrates proposes that knowing the good naturally leads to doing the good, indicating a direct relationship between knowledge and virtuous action. So knowledge leads to the good. For the math people, I think Socrates was implying an if-and-only-if statement. He shows the other direction by proving the contrapositive. Observe this quote from Plato’s Apology.

Good Sir, you are an Athenian, a citizen of the greatest city with the greatest reputation for both wisdom and power; are you not ashamed of your eagerness to possess as much wealth, reputation, and honors as possible, while you do not care for nor give thought to wisdom or truth or the best possible state of your soul?

Here, Socrates implies that if you do not have knowledge of the good, then you are not good; in other words, being good requires knowledge. This might appear obvious, but I think it is deeply significant. Many, I think, would believe that someone could conceivably do good by accident, but for Socrates, this is impossible. Aristotle mirrors this idea in Book II of his Nicomachean Ethics, in a passage that can be paraphrased like this:

It is not enough to do the right thing; one must also do it in the right way, with the right understanding, and from the right disposition.
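Returning to Socrates, the logic of the if-and-only-if claim can be checked with a truth table. In the sketch below, K stands for “has knowledge of the good” and G for “is good” (these labels are my gloss, not Socrates’ wording). It checks that each direction is equivalent to its own contrapositive, that the converse is not automatically equivalent (which is why both directions need support), and that the two directions together amount to K if and only if G.

```python
from itertools import product


def implies(p, q):
    """Material implication: 'p -> q' is false only when p is true and q is false."""
    return (not p) or q


# Every combination of truth values for K ("has knowledge of the good")
# and G ("is good"); these labels are an illustrative gloss.
cases = list(product([False, True], repeat=2))

# K -> G is equivalent to its contrapositive, not G -> not K.
forward_matches_contrapositive = all(
    implies(k, g) == implies(not g, not k) for k, g in cases)
# G -> K is equivalent to its contrapositive, not K -> not G.
backward_matches_contrapositive = all(
    implies(g, k) == implies(not k, not g) for k, g in cases)
# The converse is NOT automatically equivalent (K false, G true breaks it).
converse_differs = any(implies(k, g) != implies(g, k) for k, g in cases)
# Both directions together say exactly "K if and only if G".
both_directions_make_iff = all(
    (implies(k, g) and implies(g, k)) == (k == g) for k, g in cases)

print(forward_matches_contrapositive, backward_matches_contrapositive,
      converse_differs, both_directions_make_iff)  # True True True True
```

So “knowledge leads to the good” and “without knowledge, you are not good” are two different claims, and only together do they give the if-and-only-if.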

Further, Socrates believed that ignorance, not wickedness, is the cause of wrongdoing. If everything revolves around knowledge, what did he think knowledge was? Here’s the thing: he never claimed to know. In Plato’s Theaetetus, Socrates examines whether knowledge can be defined as perception, as true belief, or as true belief accompanied by an account (a close ancestor of what is now called justified true belief), but he finds flaws in each definition, leaving the question unresolved.

This is puzzling: Socrates believed that doing good is synonymous with having knowledge, but he also did not know what knowledge is. Here is where words fail us and we must question the assumptions. First of all, we must acknowledge that Plato’s dialogues have been translated from Greek. Socrates talks primarily about two things: the good, agathon, and virtue, areté. It seems that all cultures over time have had a notion of the good, so we will leave that word for now. What about areté? The English translation is “virtue” but also “excellence.” It is about fulfilling one’s highest potential, and it applies to both moral virtue and functional excellence. However, we have no direct access to Socrates’ thoughts, as he did not actually write anything; rather, Plato wrote dialogues with Socrates as a character. Maybe Socrates believed that if he put anything into words, it would immediately miss the point: a paradox.

In Plato’s Phaedrus, Socrates argues that writing weakens memory and gives people the illusion of knowledge rather than true understanding. (Oh man, what’s going to happen to me!) For Socrates, learning happens through questioning and self-examination, not passive reading or memorization. He also feared that writing would lead to misinterpretation, as fixed texts could be taken out of context or accepted as rigid truths instead of prompts for deeper reflection. Again, we see Socrates as a questioner instead of an answerer. In the Phaedrus, Socrates sums up his concerns through a myth in which the Egyptian god Theuth (Thoth) presents writing as a gift to the Egyptian king Thamus, saying it will improve memory and wisdom. However, Thamus rejects it, saying:

you have invented an elixir not of memory, but of reminding; and you offer your students the appearance of wisdom, not true wisdom. They will read many things without instruction and will therefore seem to know much, when they are for the most part ignorant.

So what does areté really mean in the context of people having it? I propose that the word itself is a paradox for Socrates. I do not know what Socrates believed, but to me areté means understanding that it is a void concept.

The final, and, in my opinion, the most consequential, piece of evidence is the story of how Socrates died. Socrates was sentenced to death in 399 BCE by an Athenian court on charges of corrupting the youth and impiety. (Note: his trial and execution are known through Plato’s dialogues, especially the Apology, Crito, and Phaedo.)

According to Plato’s Apology, Socrates defended himself by arguing that he was only guilty of questioning people’s false beliefs, and that his role as a thinker and questioner was a service to the city. However, the jury found him guilty. When given the chance to propose an alternative punishment, as was customary in Athenian trials, Socrates suggested he should be rewarded instead of punished. This angered the jury, and, by a wider margin than the guilty verdict, they sentenced him to death by drinking hemlock.

In Plato’s Crito, Socrates’ friends urge him to escape from prison, but he refuses, arguing that it would be unjust to break Athenian law, even if the law had wronged him. He believed in respecting the social contract of the city and that fleeing would contradict his lifelong commitment to justice and principle.

His final moments are described in Plato’s Phaedo, where Socrates engages in a philosophical discussion about the immortality of the soul, calmly drinks the hemlock, and dies peacefully surrounded by his friends. His last recorded words were to remind his friend Crito to offer a sacrifice to Asclepius, the god of healing, which some interpret as a sign that he saw death as a form of liberation. Or maybe, as I believe, no one ever dies. We see others die, but from their perspective, they never die; rather, their perception of time dilates, like in special relativity. A similar phenomenon is reported during certain psychedelic trips, like those associated with DMT. (We will explore drugs, especially DMT, in another post.)

Why do I view this as evidence? Well, if Socrates truly believed he had no knowledge, why would he fear death, since he would not know what death is? In the face of the event which people view as the ultimate bad, Socrates did not flinch; he actually embraced it.

Now, returning to the notion of questions, we can see that Socrates did not just question others; he questioned questioning itself. His method, now known as the Socratic method, was designed not to find answers, but to dismantle assumptions. Instead of taking a question at face value, Socrates would interrogate the underlying premises, exposing contradictions and forcing his interlocutors to rethink their own positions. Consider this exchange from Plato’s Meno (paraphrased).

Meno: Can virtue be taught?

Socrates: How can we answer that if we don’t first know what virtue is?

Socrates refuses to answer the question because the question itself is built on an unstable foundation. It assumes we already know what virtue is when, in reality, we may not. This is the essence of questioning the question.

The path forward

Now that we’ve seen how Socrates implemented this approach, let’s drill down into the logic behind it, which connects deeply to mathematics, cognitive science, physics, and the structure of knowledge itself. If we want to understand what it means to question a question, we first need to define what a question actually is as a formal object.

A question is not just an inquiry; it sets up a structure that shapes its own answer. To see why, we need to formally introduce the concept of figure and ground, which I mentioned before. A question constructs a ground: a foundation that the figure/content of the question is built upon. Imagine that the ground is made up of a bunch of lego pieces. The figure/content is a spaceship made with those lego pieces, and the answer must be another, smaller spaceship with a mechanism to snap into the larger one. We usually only think about the spaceships and do not examine the lego pieces they are made out of. Now, in terms of questions, the lego pieces are words. Words, like lego pieces, can only fit together in certain ways.

We can now turn to Ludwig Wittgenstein who talked about the lego pieces—the words. Wittgenstein, like Socrates, challenged hidden assumptions. Where Socrates directly questioned people using language, Wittgenstein questioned language itself. He believed that many of the problems in philosophy were not real problems at all, but rather confusions caused by the way language structures thought. If Socrates revealed how questions frame their own answers, Wittgenstein showed that language frames the very questions we ask.

Wittgenstein first believed that language is a rigid structure that frames reality and that a sentence is meaningful only if it corresponds to a fact about the world. Later, he completely abandoned this view, arguing that language is not a mirror of reality; it is a tool that gains meaning through use. Here, meaning does not come from words themselves, but from their context and function. (I encourage you to read about the theory of functionalism in cognitive science.) A word is like a lego piece: its meaning depends on the set it is a part of. This already should feel weird, since words strung together lead to emergent meaning (or do they?), but we are saying that the emergent meaning also dictates the meaning of the words. We are in a circle! This circle will lead us to some extraordinary places where we will weave together language, fractals, quantum mechanics, and wave-particle duality.

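Now, if human language is always dependent on context, can we escape ambiguity by turning to formal systems? This is where we turn to Gödel’s incompleteness theorems. Roughly, Gödel proved that any consistent formal system strong enough to express basic arithmetic contains true statements that it cannot prove, and that such a system cannot prove its own consistency. In that sense, a mathematical system can never fully capture all the truths about itself.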
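The trick that lets a formal system talk about itself is Gödel numbering: formulas are encoded as numbers, so statements about numbers can double as statements about formulas, including, eventually, themselves. The sketch below shows only that encoding step, not the incompleteness proof itself, and the six-symbol alphabet and its codes are a hypothetical toy choice. A formula whose symbols have codes c1, c2, c3, … becomes 2^c1 · 3^c2 · 5^c3 ⋯, and unique prime factorization guarantees it can be decoded again.

```python
from itertools import count

# Hypothetical toy alphabet: "S" is the successor symbol, so "S0" means 1.
SYMBOLS = {"0": 1, "S": 2, "=": 3, "+": 4, "(": 5, ")": 6}
DECODE = {code: sym for sym, code in SYMBOLS.items()}


def prime_stream():
    """Yield 2, 3, 5, 7, ... by trial division against earlier primes."""
    found = []
    for candidate in count(2):
        if all(candidate % p for p in found):
            found.append(candidate)
            yield candidate


def godel_number(formula):
    """Encode the i-th symbol as the exponent of the i-th prime."""
    n = 1
    for p, ch in zip(prime_stream(), formula):
        n *= p ** SYMBOLS[ch]
    return n


def decode(n):
    """Recover the formula by reading off each prime's exponent in order."""
    chars = []
    for p in prime_stream():
        if n == 1:
            break
        exponent = 0
        while n % p == 0:
            n //= p
            exponent += 1
        chars.append(DECODE[exponent])  # KeyError means n is not a valid code
    return "".join(chars)


formula = "S0=S0"  # "1 = 1"
g = godel_number(formula)
print(g)          # 808500
print(decode(g))  # S0=S0
```

Once formulas are numbers, a statement about numbers can secretly be a statement about formulas, which is where the self-reference in Gödel’s proof comes from.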

The work of both men depends on the idea of paradox. For Wittgenstein, a question cannot define all of its assumptions, since the meaning of a question relies on its words, but the words depend on their context, which is embedded in the question itself. For Gödel, a formal system cannot prove all of its truths, since a truth (content) depends on its form, and (now here is where it gets weird) I claim that the form depends on the content as well. If you’re a math person, you may be screaming at me right now. But hold on: what if this dependency between form and content isn’t a flaw in formal systems, but an unseen feature? A property that connects logic, language, and perception at a fundamental level.

This inquiry will lead us directly to the Riemann Hypothesis, a conjecture about the zeros of the Riemann zeta function and one of the biggest open questions in math. And then we will naturally bump into dark matter and dark energy. We will see that certain things have been staring us in the face even if we never noticed.

Finally, I want to explore where these ideas leave us. We’ll reflect on our journey and see how questioning the questions leads us to places we never thought existed before and forces us to confront the invisible structures that shape our thinking.

Figure and Ground

We previously mentioned the book Gödel, Escher, Bach (GEB), and now we return to it because Douglas Hofstadter was deeply interested in figure and ground. One of his key insights is that meaning does not just exist on its own—it emerges from the contrast between figure and ground.

Think about drawing on a blank sheet of paper. When you sketch something, the white space is the ground, and the lines you draw are the figure. If there is no distinction between the figure and ground, you cannot draw. Imagine drawing on a black sheet of paper with a black pen! Now, there is usually a clear distinction between the two, but what if you fill up a white sheet of paper almost completely with color? Do the figure and ground switch? I will leave you to think about this, but what comes to my mind are young children’s drawings, which often appear nonsensical (I do not like that word hehe), as well as Picasso’s work. Picasso was a master at making figure and ground interact in ways that challenge perception. One of the best examples is his painting Guernica, where the chaotic, fragmented figures seem to merge with their background, forcing the viewer’s perception to shift between figure and ground. The eye tries to pull out clear shapes, but they dissolve back into the composition, making it impossible to fully separate the two.

Here is another example to help you think about the idea. Imagine you receive a message written in an unknown code. Before you can even begin decoding the message, you must first recognize that it is a message at all. If you do recognize it as a message, there is already some shared code between you and the message sender. This may not seem like a code, but it is; it is just a code in the ground, not in the figure. (To give you a sneak peek, I believe that figure and ground are defined recursively and self-referentially.) So the figure and ground are both codes in their own right. Why are they different, you may ask? Without reference to our human perspective, I honestly don’t know, and if you think you can convince me that they are fundamentally different, please message me! Another interesting question, which I will not answer here, is this: if all messages must first be recognized (by recognizing the ground), are we surrounded by messages which we will never see, since they have a different ground than the ground of our perception?

Wittgenstein

Now, let’s expand on Wittgenstein’s work in relation to our new notion of figure and ground. Wittgenstein’s shift in philosophy (from language as a rigid system to language as something fluid) mirrors a deeper pattern that extends beyond words themselves. I believe that language and perception are not separate forces acting on each other, but rather, they exist at different scales of the same underlying process. If we rethink language in this way, we can fit it into our broader framework of figure and ground.

We will analyze Wittgenstein’s journey from first principles. (Note: I am going to write an essay later on first-principles thinking.)

In his early work, he famously stated this.

The limits of my language mean the limits of my world

I cannot say for sure, but I interpret Wittgenstein as implying that there is some reality out there that language tries to describe. This is the traditional view of Western philosophy. (I challenge this view in this post if you are interested.) What other option is there, you may ask? Wittgenstein could have instead said, “the limits of my language mean the limits of the world.” That one small change would have radically altered his claim. By saying “my world,” he acknowledges a subjective experience shaped by language, rather than asserting that language is the world itself. This subtle distinction separates traditional philosophy from a much more radical perspective, one in which reality itself is nothing but a construct of linguistic and perceptual structures.

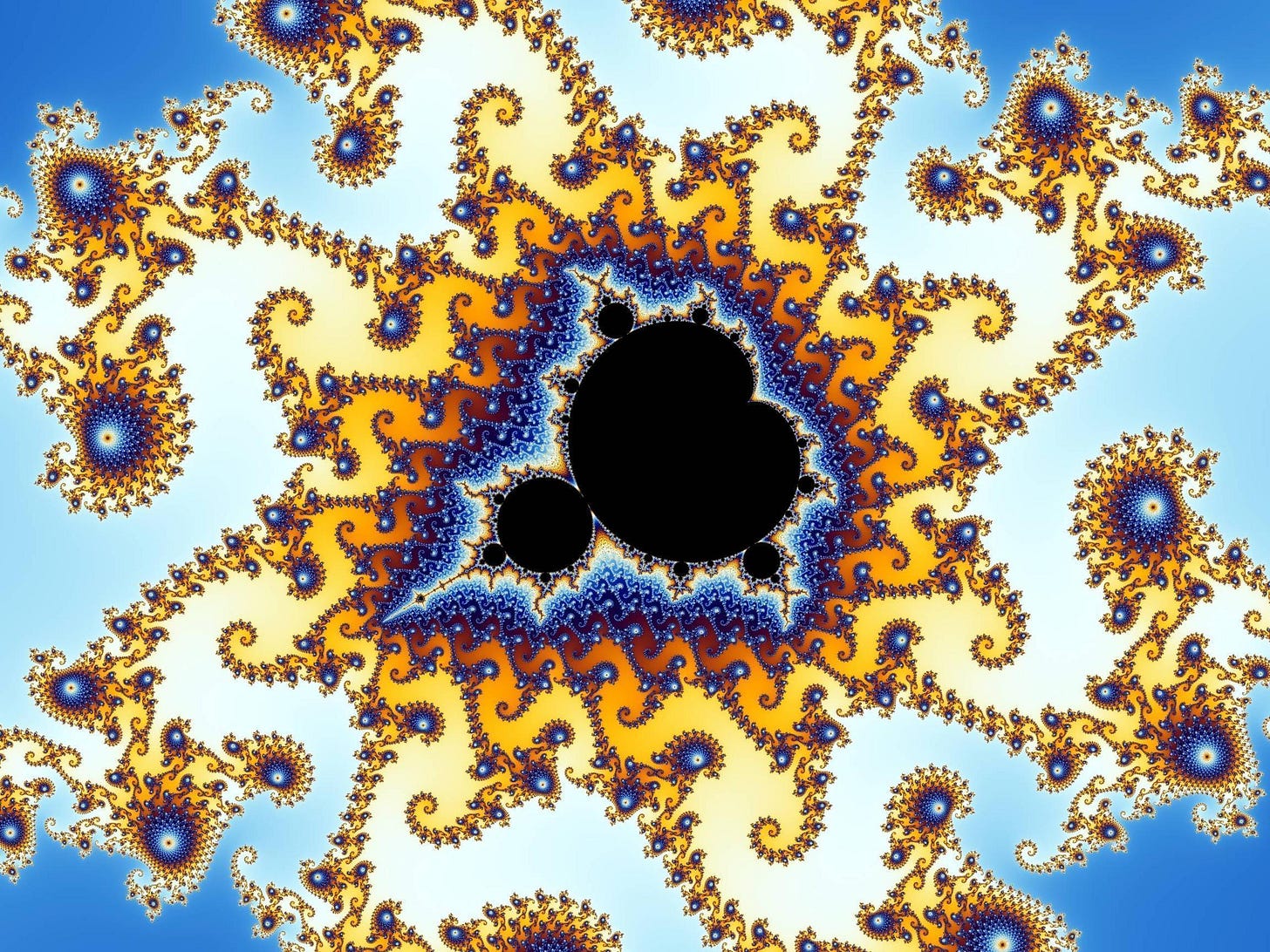

If I were reading this, my first reaction would be that there are definitely parts of the world which we perceive with our senses which cannot be perfectly described by language; so how could language define the limits of the world? To see how it could, let’s turn to fractals. Look at the classic example, the Mandelbrot set.

These geometric objects are self-similar: whether you zoom in or out, similar structures keep reappearing. (The Mandelbrot set is only approximately self-similar, while fractals such as the Koch snowflake repeat exactly at every scale.) What’s striking about fractals is that they lack an absolute reference point. How does this apply to language? Well, first we note that language seems to be emergent from our perception. Now, I am proposing that language, and other emergent systems, have the exact same shape as their parts. How could this be? An ant mound is an emergent system where the parts are the ants; but an ant mound is not a big ant! Or is it? If we rescale our reference frame, it might just be. What if perception and language work the same way? If this is the case, then language and perception are not fundamentally different. But, since language is emergent, it requires more information than, say, sight to code a single unit (a base level of information for a system). Thus, reality (if we can even call it that) lives on different levels of abstraction; perception and language may not be fundamentally different, but they do exist at different scales. This means that language is not just a description of reality; it is a recursive process where meaning forms at multiple levels, just as perception does.
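If you want to poke at the Mandelbrot set yourself, here is a minimal escape-time sketch. The rule is the standard one: start at z = 0, repeatedly apply z → z² + c, and if |z| ever exceeds 2 the orbit is guaranteed to blow up, so c is outside the set. The window sizes, resolution, and iteration limits are arbitrary choices of mine, and a point that survives the iteration limit is only *probably* inside.

```python
def in_mandelbrot(c, max_iter=100):
    """Escape-time test: iterate z -> z*z + c starting from z = 0.

    If |z| ever exceeds 2 the orbit must diverge, so c is outside the set.
    Surviving max_iter steps only means c is probably inside."""
    z = 0j
    for _ in range(max_iter):
        z = z * z + c
        if abs(z) > 2:
            return False
    return True


def render(x_min=-2.1, x_max=0.6, y_min=-1.2, y_max=1.2,
           width=60, height=24, max_iter=50):
    """ASCII picture of the region [x_min, x_max] x [y_min, y_max]."""
    lines = []
    for row in range(height):
        y = y_max - (y_max - y_min) * row / (height - 1)
        line = ""
        for col in range(width):
            x = x_min + (x_max - x_min) * col / (width - 1)
            line += "#" if in_mandelbrot(complex(x, y), max_iter) else "."
        lines.append(line)
    return "\n".join(lines)


print(render())  # the whole set
print()
# Zoom in near c = -1.75 on the real axis, where a small copy of the set sits.
print(render(-1.80, -1.70, -0.04, 0.04, max_iter=200))
```

Shrinking the window is the code version of rescaling your reference frame: the same simple rule keeps producing structure, and near -1.75 it produces a miniature copy of the whole.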

Why do they feel different, though? Well, it seems that people contain only a finite amount of perceptual and linguistic information. Thus, there will be exponentially more units of perceptual information, since each unit is exponentially smaller. Therefore, perception defines our reality on one level and language defines our reality on a more abstract one. We assume perception is more fundamental than language, but why? Is it simply because we experience more of it? If perception is just language at a finer scale, then the distinction between them is an illusion created by where our reference point lies. I noted before that fractals do not have absolute reference points; however, any finite object inherently must have one, creating illusions of difference due to differences in scale.

But either way, Wittgenstein acknowledges that language itself shapes our world, even if not the world. The thing is, if language shapes our world, what shapes our language? There are two options.

Language shapes language

The world (context) shapes language

Option one seems odd. How could something define itself? Well, maybe it could but let’s leave that for now. The second one is definitely intriguing. This option says that the world shapes language. But we experience the world through our perception so we might as well just replace “world” with “perception.” Let’s see where this takes us.

In conversations, our perception (including previous parts of the conversation in our working memory) seems to shape language. In simpler terms, words have meaning given their context. Now, it starts getting weird when we remember (if we accept my model) that language itself is emergent from our perceptions. This brings us full circle. If perception shapes language, but language, in turn, shapes perception, then we are caught in a self-referential loop. As Wittgenstein said, “the limits of [one’s] language mean the limits of [their] world.” But if language is shaped by perception, then perception itself is limited by its own constraints. This means perception is the limit of perception itself!

The solution, I believe, is to acknowledge that perception and language are not fundamentally different but, as I said before, exist at different scales. In this way, perception doesn’t shape language and language doesn’t shape perception; I actually think there is no causal influence at all! See my post about why I believe causality is only a useful tool rather than an absolute fact about the world. So rather than viewing perception and language as separate forces influencing each other, I propose that we look at them as the same structure viewed at different scales. When we shift perspective, what we call perception at one level becomes language at another.

Now, what we call “language” and what we call “perception” are just reflections of scale, not fundamental differences in kind. But what does scale even mean here? I will write an in-depth post about this later, but for now I will just say that scale is not absolute; it is relative. If we return to the lego metaphor, we can compare single lego pieces to lego sets. With 100 individual lego pieces we can create a bunch of different creations. With 100 lego sets we can create just as many creations (if we look only at the sets and not the individual pieces) by imagining a world where these lego sets interact. But the latter requires many times more lego pieces.
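The Lego comparison can be made concrete with a small Python sketch. It rests on assumptions of mine that the text does not state. A "creation" is taken to be any non-empty choice of which units (pieces or sets) to use, and each set is given a hypothetical 500 pieces. Under those assumptions, the number of possible creations depends only on how many units we have. It does not depend on the scale those units live at.

```python
def creations(units: int) -> int:
    """Count non-empty selections of units (assumption: a 'creation' is a selection)."""
    return 2 ** units - 1


def pieces_needed(units: int, pieces_per_unit: int) -> int:
    """Total individual pieces required to supply the given number of units."""
    return units * pieces_per_unit


PIECES_PER_SET = 500  # hypothetical size of one Lego set

# 100 loose pieces versus 100 sets: the same space of creations,
# but very different numbers of pieces hidden underneath.
for label, per_unit in [("pieces", 1), ("sets", PIECES_PER_SET)]:
    print(f"100 {label}: {creations(100)} creations from {pieces_needed(100, per_unit)} pieces")
```

The space of creations is identical at both scales. Only the count of pieces underneath changes.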

Using logic, we have arrived at a theory similar to, albeit more radical than, the one in Wittgenstein’s later works, where he claimed that language was fluid. Given the circular relationship we defined, it makes sense that Wittgenstein came to this conclusion. He probably still believed that language and reality were separate, but he acknowledged that they affect each other. The first part of his work can be seen as (language → reality) and the second part as (reality → language). We have replaced this with (language → language on a different scale) or (perception → perception on a different scale).

Now that we have done the work, we can easily apply the ideas of figure and ground to this relationship. The key insight is that figure and ground are interchangeable. Just as perception can shape language, language can just as easily shape perception. In a traditional view, though, perception is the ground and language is the figure. This fits because we know that the figure emerges in relation to the ground. Depending on how we perceive the world, our language will change (since language reflects the world). I think this makes intuitive sense. This correlates to the second half of Wittgenstein’s work. What does the first part say then? Does it say that the figure defines the boundaries of the ground?

Does this mean that the figure is the ground and the ground is the figure? No, not really. This is where we must question the question. We’ve assumed so far that figure and ground exist as separate, binary entities. But what if we looked at them recursively? Then they don’t just swap places; rather, they create each other. Perception gives rise to language, which then structures perception, forming an endless loop. This may feel like a circle, but what if the problem is that we are trying to force it onto a linear framework? What if the cycle isn’t flat, but fractal? In quantum mechanics, before measurement, a particle exists in a superposition of possible states. Only when it is measured does it collapse into a definite state. What if language does the same to perception? We perceive the world, but perception is uncertain, fluid, and ambiguous: a wave function of all possibilities. Language collapses our perception into a fixed form. It forces the wave of possibility into a definite structure, creating a figure. But here’s the crucial part: once language is used, it becomes the ground for the next perception, and that perception takes exactly the shape of the language that framed it (they are the same thing). Thus, language doesn’t just shape how we speak; it shapes what we can perceive.
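The quantum analogy carries a lot of weight here, so the following Python sketch shows what measurement does in the textbook picture. It is my illustration, not part of the argument. A two-outcome state is a list of complex amplitudes. A measurement picks outcome k with probability |amplitude_k|² (the Born rule). The state then collapses, so repeating the measurement gives the same answer. The seed and the particular amplitudes are arbitrary choices.

```python
import math
import random


def measure(amplitudes, rng):
    """Sample an outcome with probability |amplitude|^2 (the Born rule), then collapse.

    Returns the outcome index and the collapsed state, in which that outcome
    has amplitude 1 and every other outcome has amplitude 0.
    """
    weights = [abs(a) ** 2 for a in amplitudes]
    outcome = rng.choices(range(len(amplitudes)), weights=weights)[0]
    collapsed = [0j] * len(amplitudes)
    collapsed[outcome] = 1 + 0j
    return outcome, collapsed


rng = random.Random(42)
superposition = [1 / math.sqrt(2), 1j / math.sqrt(2)]  # equal mix of outcomes 0 and 1

# Many fresh measurements of the same superposition: roughly half give each outcome.
counts = [0, 0]
for _ in range(10_000):
    outcome, _ = measure(superposition, rng)
    counts[outcome] += 1
print(counts)

# Once collapsed, measuring again always repeats the same answer.
first, state = measure(superposition, rng)
repeats = [measure(state, rng)[0] for _ in range(100)]
print(first, all(r == first for r in repeats))
```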

Notice the connection here to wave-particle duality. What if duality is solely a matter of scale and reference points? For perception and language, one is not the wave and the other the particle. Instead, they are interchangeable and recursive. This may feel like a cycle, but do not be fooled. A cycle is a circle, and we just argued that a circle forces something non-linear onto a linear framework. So why does it feel like a circle? Because of time! But what if time itself is just another emergent property of scale, just as perception and language are? What if time is simply our way of tracing a path through a structure that exists all at once?

I am claiming here that time is not fundamental. (I will write a more in-depth post about this later.) Instead, time is just a linear way of interpreting a fractal where all recursive iterations exist together, all at once.

If you’re still with me, think back to our original inquiry—how do questions shape answers? If perception and language exist in this recursive structure, maybe questions and answers do as well. I propose this sequence.

Before even asking a question, the space of answers is uncountable. Without a question to frame them, all answers exist in superposition.

Asking a question collapses this undefined space into a structured set of possibilities. Now, only a finite set of answers exists.

Answering the question collapses this set further. But it does more than just reduce it. The answer itself generates a new wave of possible questions, forming a new space of inquiry.

Now, it may seem like this process simply shrinks the space of possibilities, but this isn’t always the case. Some answers expand the space of potential questions rather than narrowing it.

For example, answering “bad” to the question “how are you doing?” invites far more follow-up questions than the standard “good.” If the space always shrank, the process would eventually collapse into nothingness. But instead, questioning sustains itself through expansion and recursion.

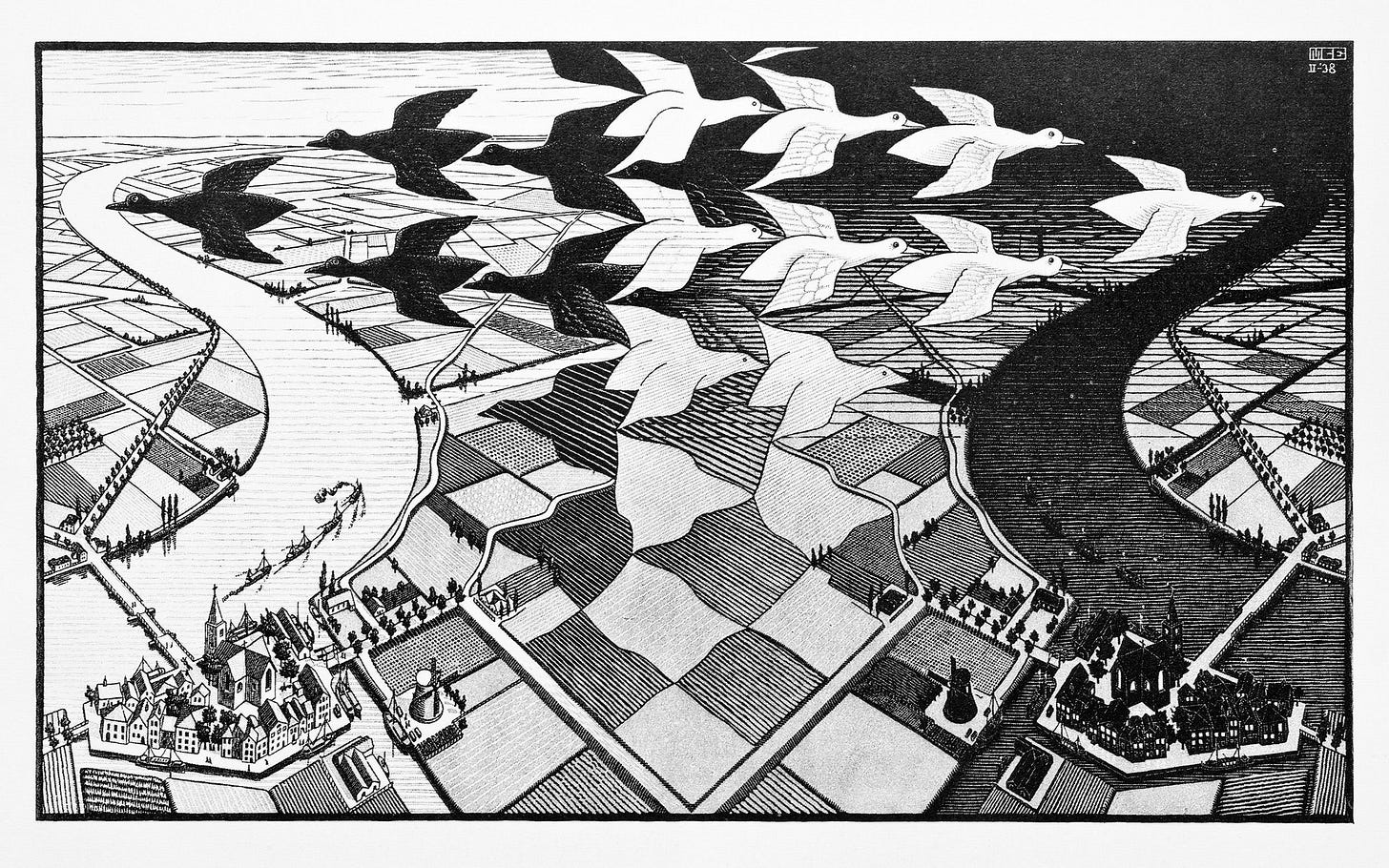

I will leave you with a picture by M.C. Escher.

How does this image relate to what we have been talking about?

Gödel: The Fragility of Formal Systems

We've now wandered deep into a messy world of recursion, fractals, and waves. At every turn, structure collapses into self-reference, meaning emerges only in relation to context, and even time itself seems to be nothing more than the process of linear mappings and scale transitions. Where can we find something solid?

Perhaps we can turn to formal systems where logic is crisp, definitions are clear, and truth is built from first principles.

What is a formal system? A formal system is a mathematical system—or maybe just a logical one—built from a fixed set of axioms and rules of inference.

Axioms are assumed truths—the ground on which everything else is built.

Theorems are the figures, emerging from the axioms through a step-by-step process of logical deduction.

Unlike natural language, where meaning shifts with context, formal systems promise rigidity and precision—no ambiguity, no recursion, or so it seems.
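A formal system can be tiny. The Python sketch below uses Douglas Hofstadter's MIU system from Gödel, Escher, Bach. That example is my choice and not part of this essay's argument. The system has one axiom, "MI", and four rules of inference. A breadth-first search lists every theorem reachable within a few steps, so you can watch theorems (the figure) emerge mechanically from the axiom (the ground).

```python
from collections import deque


def successors(s: str) -> set[str]:
    """Apply each MIU rule of inference everywhere it fits."""
    out = set()
    if s.endswith("I"):                 # Rule 1: xI -> xIU
        out.add(s + "U")
    if s.startswith("M"):               # Rule 2: Mx -> Mxx
        out.add("M" + s[1:] * 2)
    for i in range(len(s) - 2):         # Rule 3: any III -> U
        if s[i:i + 3] == "III":
            out.add(s[:i] + "U" + s[i + 3:])
    for i in range(len(s) - 1):         # Rule 4: delete any UU
        if s[i:i + 2] == "UU":
            out.add(s[:i] + s[i + 2:])
    return out


def theorems(axiom: str = "MI", max_steps: int = 3) -> dict[str, int]:
    """Breadth-first search: map each theorem to the fewest rule applications that derive it."""
    depth = {axiom: 0}
    queue = deque([axiom])
    while queue:
        s = queue.popleft()
        if depth[s] == max_steps:
            continue
        for t in successors(s):
            if t not in depth:
                depth[t] = depth[s] + 1
                queue.append(t)
    return depth


for theorem, steps in sorted(theorems(max_steps=2).items(), key=lambda kv: (kv[1], kv[0])):
    print(steps, theorem)
```

With `max_steps=2`, the search lists MI, then MII and MIU, then MIIII, MIIU and MIUIU. Hofstadter's famous puzzle asks whether MU is a theorem, and no bounded search can settle it. Hofstadter shows it is not, using an invariant that lives outside the rules themselves: the number of I's is never a multiple of 3.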

This was the dream of Bertrand Russell and Alfred North Whitehead, who, in Principia Mathematica, attempted to construct all of mathematics on a firm logical foundation. The goal was absolute certainty. They wanted to ensure that every true mathematical statement could, in principle, be proven from a set of axioms. A wonderful dream but…

Then came Kurt Gödel, who shattered this dream. His first Incompleteness Theorem (1931) proved that any consistent formal system powerful enough to express basic arithmetic is inherently incomplete. This means there will always be true statements that cannot be proven within the system itself. This tells us that the ground is not as firm as we thought. It flips the entire premise of formal systems upside down.

Axioms are supposed to be the ground, the unquestionable foundation. But Gödel shows that no such foundation is ever complete: there will always be truths that lie outside the system. If axioms are the ground and theorems are the figure, then the ground itself must be riddled with holes. If it weren’t, the figure (the theorems) would be complete.

Now, let’s take this a step further. What if axioms themselves reflect a measurement, the collapse of a wave function as in quantum mechanics? I am talking about the smallest scale, like the Planck scale, since our axioms are supposed to be as basic as possible.

Imagine zooming into the system: what we consider axioms could actually be one of infinitely many (at least from our perspective) possibilities living in a fractal. And if we zoom out, we lose sight of our current system, and as we do, it becomes a wave in which the theorems we derive from the usual axioms may eventually become entangled enough to give rise to new emergent axioms of a larger system. In this way, axioms and theorems are not static; they recursively define each other, just as in our previous example of language and perception. In fact, I see an isomorphism here, meaning that the structure of mathematical emergence is fundamentally the same as the structure of perception, language, and inquiry itself.

Perception grounds language, but language then reshapes perception.

Axioms ground theorems, but theorems… what? Reveal the incompleteness of axioms?

But wait, this doesn’t seem quite right! Why don’t theorems reshape axioms? Well, because we defined math that way. Until recently, math did not have the tools it has today: research on emergent systems, fractals, and quantum mechanics. Let’s dream big and see if we can complete the isomorphism. But let’s remember: it’s not that theorems redefine the axioms they came from, but rather that theorems lead to new emergent axioms in a recursive and fractal way. I propose the following sequence.

Before defining axioms, the space of all possible axioms is uncountable. We could theoretically construct many different logical foundations, but until we pick a system, they all exist in a kind of superposition. This mirrors the notion we described above—before a question is asked, all answers exist in an undefined space.

Choosing a set of axioms collapses this superposition into a formal system. This is like asking a question; it structures what is possible inside the system. The system is now defined, but Gödel showed that this is just the beginning of another recursive step.

Measuring the system (proving a theorem) collapses the wave function into one specific truth but leaves an infinite landscape unexplored. Any theorem we prove is just one measurement of this system. There will always be infinitely many theorems left unproven, and, as Gödel showed, true statements that can never be proven within the system at all: truths beyond reach. This is just like how language collapses perception and forms a new wave of possible perceptions.

More theorems are derived from the axioms, but the space of possible theorems is never exhausted. The formal system now becomes a new wave function containing all the theorems that could be proven. Yet, as Gödel demonstrated, even this space does not contain every truth the system can express. We usually take this to mean that there are more truths than can ever be derived within the system. But maybe it’s not that there are more truths in the system, but rather that there must be truths which emerge from the system.

At first glance, incompleteness seems like a flaw, a hole in formal systems. But what if it’s the fundamental feature of how truth emerges? Each new axiom system collapses one space, but creates another. Each theorem proves one truth, but opens an infinite space of unprovable ones. We never reach a final answer—only deeper structures of questioning. These dynamics are the same in language and perception, figure and ground, and most importantly questions and answers.

We can see Gödel’s theorem isn’t just about mathematics—it’s a universal principle of knowledge itself.

So where does this leave us? We now know that even our most formal structures are self-referential, incomplete, and fractal in nature. I believe that this isn’t just a mathematical oddity but a fundamental property of knowledge itself. If formal logic cannot fully ground itself, can anything?

Before, we hoped that perception could be our absolute reference point, only to realize it recursively depends on language. Now we find that logic itself is caught in a self-referential loop as well.

We must ask, what does this mean for questions and answers? If even formal systems cannot contain all their truths, is any question ever fully answerable? Or are answers always just temporary figures, destined to become the shifting ground of the next question?

The Riemann Hypothesis

Feel free to skip this part if you are less interested in math, but I cannot help seeing how this framework may connect to the Zeta function.

Before diving directly into it, what other theories can we draw from to help us? Let’s turn to percolation theory. Percolation theory describes how a network transitions from disconnected islands to an interconnected system as random connections are added. Below a critical threshold (exactly 1/2 for bond percolation on the two-dimensional square lattice), the system remains fragmented: there is no continuous path crossing the space. Past the percolation threshold, the system suddenly forms an infinite connected cluster, a single structure that spans the whole space.
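The following minimal Python sketch shows the threshold in action for bond percolation on an L × L square lattice. Each bond is open with probability p. A union-find structure merges connected sites, and the code checks whether an open path links the top row to the bottom row. The grid size, trial count and seed are arbitrary choices of mine. On a finite grid the jump is smeared out, and the sharp transition at exactly 1/2 only appears as the grid grows without bound.

```python
import random


class UnionFind:
    """Disjoint-set forest with path halving."""

    def __init__(self, n: int):
        self.parent = list(range(n))

    def find(self, x: int) -> int:
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: int, b: int) -> None:
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[ra] = rb


def spans(L: int, p: float, rng: random.Random) -> bool:
    """Open each bond with probability p; is there an open top-to-bottom path?"""
    n = L * L
    top, bottom = n, n + 1                  # two virtual nodes
    uf = UnionFind(n + 2)
    for c in range(L):
        uf.union(c, top)                    # every top-row site touches `top`
        uf.union((L - 1) * L + c, bottom)   # every bottom-row site touches `bottom`
    for r in range(L):
        for c in range(L):
            i = r * L + c
            if c + 1 < L and rng.random() < p:   # horizontal bond to the right
                uf.union(i, i + 1)
            if r + 1 < L and rng.random() < p:   # vertical bond downward
                uf.union(i, i + L)
    return uf.find(top) == uf.find(bottom)


def spanning_fraction(L: int, p: float, trials: int = 20, seed: int = 0) -> float:
    """Fraction of random lattices that contain a spanning path."""
    rng = random.Random(seed)
    return sum(spans(L, p, rng) for _ in range(trials)) / trials


for p in (0.3, 0.45, 0.5, 0.55, 0.7):
    print(p, spanning_fraction(50, p))
```

The printed fractions climb steeply as p passes through 1/2. Well below 1/2, the lattice stays a collection of islands.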

This seems eerily close to what we have described in terms of wave-function collapse and Gödelian incompleteness.

Before axioms are chosen, the mathematical landscape is uncountable—a vast wave function of potential formalisms.

Choosing a set of axioms is like making a first measurement.

This collapses the wave of all possible mathematical systems into one specific formal structure.

However, this system starts off fragmented—its theorems are isolated, requiring further connections. In the beginning, the theorems derived from the axioms are disconnected like islands in a percolation system. It is almost like there is only one way to reach the theorems at this point.

As more theorems are discovered, they start forming deeper connections, becoming entangled with each other. Now there are many ways to reach each theorem.

This entanglement creates a new emergent wave function, meaning that theorems are no longer just discrete truths. They combine into a higher-order structure.

Now, I claim that this emergent wave function is isomorphic to the original one, just at a different scale. This means we are back to the beginning! We have now created a new uncountable space.

Now, this is very weird, at least in relation to current mathematics. We have gone in a loop: uncountability → finiteness → uncountability. I have wondered in the past whether everything is essentially the same as nothing, and this seems to suggest so. Uncountability can be thought of as all possibilities but also as complete emptiness (the void), because the wave function has not yet collapsed. I am going to write another post detailing this phenomenon, but for now I will give you a short example. Imagine the blank sheet of paper that we mentioned before. In the beginning, it’s almost like we have an uncountable space: there is no figure, no content. As we start drawing, we generate content, but as we completely fill up the page, we return to where we started! In the beginning we have nothing and at the end we have everything, but the fundamental pattern in both cases is the same. Here is another way to think about it: if you start off with zero complexity, what exists? Nothing. As we add complexity, things start to exist, but at maximum complexity, where everything is different, what do we have? Nothing again. So everything = nothing.
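The blank-page example can be made concrete in a short Python sketch, under an assumption of my own. Treat the page as a grid of 20 cells, each either blank or inked, and count how many distinct pages have exactly k inked cells. There is exactly one empty page and exactly one fully inked page, and the variety peaks when half the page is filled.

```python
from math import comb

CELLS = 20  # hypothetical page: a 4 x 5 grid of cells, each blank or inked

# variety[k] = number of distinct pages with exactly k inked cells
variety = [comb(CELLS, k) for k in range(CELLS + 1)]

print(variety[0], variety[CELLS])                  # the blank page and the full page: one each
peak = max(range(CELLS + 1), key=lambda k: variety[k])
print(peak, variety[peak])                         # the fill level with the most possible pages
```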

At this stage, we see that Gödel’s incompleteness theorem, percolation theory, and mathematical structure may all follow the same recursive pattern.

But now let’s push this further—what does this mean for the Riemann Hypothesis?

Let’s think about prime numbers. Euler linked the primes to the Zeta function through his product formula. Traditionally, prime numbers are seen as the most fundamental objects in number theory. They are the indivisible building blocks of arithmetic, yet their distribution seems chaotic and resistant to patterning. Things in nature which are fundamental usually do not exhibit this kind of behavior unless they are part of chaotic, emergent systems. Thus, it makes sense that the seemingly random distribution of the primes has puzzled mathematicians for centuries.
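Euler's link is the product formula \(\zeta(s) = \sum_{n \ge 1} n^{-s} = \prod_{p} (1 - p^{-s})^{-1}\). It holds for Re(s) > 1, and the product runs over all primes. The minimal Python sketch below checks both sides at s = 2, where each equals π²/6 (the Basel problem, which Euler also solved). The cutoff of 100,000 is an arbitrary choice.

```python
import math


def primes_up_to(n: int) -> list[int]:
    """Sieve of Eratosthenes (requires n >= 1)."""
    is_prime = [True] * (n + 1)
    is_prime[0] = is_prime[1] = False
    for i in range(2, math.isqrt(n) + 1):
        if is_prime[i]:
            is_prime[i * i::i] = [False] * len(is_prime[i * i::i])
    return [i for i, flag in enumerate(is_prime) if flag]


def zeta_sum(s: float, terms: int) -> float:
    """Partial sum of the series 1 + 1/2^s + 1/3^s + ..."""
    return sum(1 / k ** s for k in range(1, terms + 1))


def euler_product(s: float, limit: int) -> float:
    """Partial Euler product of 1 / (1 - p^-s) over primes p <= limit."""
    result = 1.0
    for p in primes_up_to(limit):
        result *= 1 / (1 - p ** -s)
    return result


N = 100_000
print(zeta_sum(2, N), euler_product(2, N), math.pi ** 2 / 6)
```

The sum runs over every whole number and the product runs only over primes, yet both converge to the same value.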

But what if primes aren’t fundamental at all, and we should actually be studying them as a probabilistic wave instead of as a deterministic system? I am not sure exactly how to express this, but what I am proposing is that primes are, in some sense, deterministic below the critical threshold. The point where percolation reaches infinite connection, at 1/2, is the balance between determinism and probabilistic uncertainty. This would explain why the zeros appear to lie on this line. Above the threshold, primes start to become entangled and probabilistic. In other words, they become emergent. It would then only make sense that entanglement keeps increasing. If it decreased after any finite amount, then the zeros of the Zeta function wouldn’t just be at Re(s)=1/2, since the system would return to being fragmented; and we said that this transition is where the zeros lie. But this is weird, since the prime number theorem states that the distribution of primes settles into a stable law at large scales: the number of primes up to x grows like x/ln x. But wait, didn’t we say that entanglement must increase infinitely as well? The more entangled a system becomes, the more probabilistic it should become. Right? Let’s question the question. Maybe this was a convenient assumption for quantum mechanics, but it is now preventing quantum mechanics from being merged with general relativity.
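The stable law mentioned here is the prime number theorem. It says that π(x), the number of primes up to x, satisfies π(x) / (x / ln x) → 1 as x grows. This short Python sketch counts primes with a sieve and prints that ratio for x = 10^3 through 10^6. The ratio drifts toward 1, but slowly.

```python
import math


def prime_count(x: int) -> int:
    """pi(x): the number of primes <= x, via a sieve of Eratosthenes (x >= 1)."""
    is_prime = bytearray([1]) * (x + 1)
    is_prime[0] = is_prime[1] = 0
    for i in range(2, math.isqrt(x) + 1):
        if is_prime[i]:
            # zero out every multiple of i from i*i upward
            is_prime[i * i::i] = bytes(len(range(i * i, x + 1, i)))
    return sum(is_prime)


for exponent in range(3, 7):
    x = 10 ** exponent
    count = prime_count(x)
    print(x, count, round(count / (x / math.log(x)), 4))
```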

The other option is that as numbers become more entangled (larger superposition), they eventually become deterministic again. Wait. Didn’t we run into this problem before? We encounter the same circle that ensnared figure and ground. To resolve that conflict, we concluded that figure and ground do not cycle but exist recursively in a fractal. Let’s try the same thing here. Maybe as numbers become more entangled, they eventually lead to an emergent deterministic system. We can never reach this system, just as we can never reach infinity.

So maybe we are modeling infinity incorrectly. Maybe, just maybe, infinity isn’t a limit but a phase transition into a new emergent system. Traditionally, mathematics treats infinity as a limit: a place where things either continue indefinitely or break down. But if percolation theory and emergence apply here, then

Infinity isn’t just a continuation. It’s a transition.

At infinity, structure doesn’t break down; it reorganizes into a new emergent system. In other words, infinity isn’t a static endpoint, but a dynamic phase shift into a higher-order structure.

Now, I propose that the Zeta function could be looked at as a mechanism for wave collapse. If the Zeta function encodes prime behavior, then it may also be responsible for collapsing the prime number wave function. Below the critical line, primes behave deterministically. At the critical line, a phase transition occurs, and the number system becomes entangled, leading to probabilistic behavior of the primes.

Now, let’s revisit the idea that infinity loops back on itself.

We previously said that the structure of numbers forms a loop: uncountable → finite → uncountable. This suggests a hidden symmetry where everything collapses back into itself. This builds on the notion that everything = nothing as previously mentioned.

Now, let’s apply this to divergence in the Zeta function. We normally think of divergence as a breakdown, a place where mathematics ceases to give answers. But what if divergence is actually a return? What if apparent randomness in prime distributions is a symptom of probabilistic reconvergence? Could it be that the prime number system isn’t breaking apart at high values, but reconnecting in an emergent way? This suggests that we might be applying probability theory incorrectly to prime distributions.

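Now, the Zeta function diverges at s=1: the series \(1 + 2^{-s} + 3^{-s} + \cdots\) that defines it converges only when Re(s) > 1, and at s=1 it becomes the harmonic series, which grows without bound. I am going to propose something radical. What if the number system is a probability distribution? Probabilities lie between 0 and 1. What if the Zeta function shows this distribution, and we should really only care about it where Re(s) lies between 0 and 1 (the critical strip, which contains all of its nontrivial zeros)? The whole number system could emerge from this probability distribution.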
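The contrast between s = 1 and s > 1 is easy to see numerically. In this minimal Python sketch, partial sums at s = 1 keep growing like ln n plus the Euler–Mascheroni constant. Partial sums at s = 2 level off just below π²/6.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler–Mascheroni constant


def partial_zeta(s: float, n: int) -> float:
    """Sum of 1/k^s for k = 1..n, added with compensated (fsum) precision."""
    return math.fsum(1 / k ** s for k in range(1, n + 1))


for n in (10, 1_000, 100_000):
    # s = 1: the sum tracks ln(n) + gamma and never settles.
    # s = 2: the sum approaches pi^2 / 6.
    print(n, partial_zeta(1, n), math.log(n) + EULER_GAMMA, partial_zeta(2, n))
```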

Let’s reflect on 0 and 1. Before the concept of numbers even existed, a binary state is all that existed.

0 represents nothingness

1 represents existence

These two states alone define the most basic probabilistic system. This is not a new idea—binary logic underlies computation and information theory. But what if it is even deeper than that? What if mathematics itself emerges from the recursive interplay between these two states? We need only turn to Buddhism to see the deep connection between this binary and reality (which, after all, math is supposed to model).

Let’s take a quick detour and think about some Buddhist thought.

Śūnyatā (Emptiness) and the Illusion of Independent Existence. In Mahāyāna Buddhism, Śūnyatā (शून्यता) is the idea that all things are empty of intrinsic, independent existence. This is exactly like the idea that numbers are not fundamental but emergent and entangled.

The Middle Way: Between Nothing and Everything. The Buddha taught the Middle Way, which Nāgārjuna’s Madhyamaka school later developed into a philosophy that avoids the extremes of eternalism (everything exists absolutely) and nihilism (nothing exists). This mirrors the percolation threshold, where an infinite structure exists but is not yet entangled. If we only accept 0 (nothing), we get nihilism: math doesn’t exist. If we only accept 1 (everything), we get eternalism: math is an objective reality. The truth lies in the recursive interplay between both: mathematics emerges through interdependence. This suggests that what we call “truth” in mathematics may be relational, not absolute, just like in Buddhist thought.

Pratītyasamutpāda (Dependent Origination). The Buddha taught that nothing exists by itself. Everything arises due to causes and conditions. As the Buddha did not believe in strict linear causality, this must mean, I believe, that all things, including time, identity, and reality itself, emerge from a self-referential process. Maybe the number system does not exist independently either but emerges from a deeper recursive structure. Maybe prime numbers aren’t fundamental but arise due to interconnected relationships in the number space.

The Zen Koan: The Sound of One Hand Clapping (Non-Duality in Math?) Zen Buddhism often breaks logic with paradoxes called Koans. A famous one asks “what is the sound of one hand clapping?” This is meant to break binary thinking and reveal that dualities (like 0 and 1) are illusions. What if 0 and 1 are not truly distinct? What if they are just different scales of the same underlying reality just as we saw in other systems like figure and ground, language and perception, and questions and answers. What if the entire number system is just a Koan—a trick played by infinity to create structure? This suggests that mathematics is not an absolute truth, but a tool just like language in Zen. (This is why I love writing poems which aren’t poems.)

Nirvana and the Ultimate Cycle: Returning to Zero. In Buddhist ontology, which is not ontology, Nirvana is often described as returning to nothingness but not in a nihilistic way. It is not the destruction of existence, but the transcendence of illusion. This mirrors the notion of creating a new emergent system as opposed to collapsing back to the void. This mirrors how Euler’s Identity brings everything back to 0 in the most beautiful way.

\(e^{i\pi} + 1 = 0\)

Does this mean mathematics is itself a cycle? Well, not a cycle: a fractal with recursive emergence! Maybe 0 is not just the beginning but the destination. Maybe math is just the illusion that arises from the interplay of 0 and 1.
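The following minimal Python sketch checks the identity numerically. It also shows how \(e^{i\theta}\) walks around the unit circle as θ grows, which is the cycle I attribute to Pi and i below. Floating-point arithmetic leaves a residue on the order of 10^-16 instead of an exact 0.

```python
import cmath

# Euler's identity, up to floating-point rounding.
value = cmath.exp(1j * cmath.pi) + 1
print(value, abs(value))

# e^(i*theta) in quarter turns: 1, i, -1, -i, and back to 1.
for k in range(5):
    z = cmath.exp(1j * k * cmath.pi / 2)
    print(k, complex(round(z.real, 12), round(z.imag, 12)))
```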

Now let’s introduce the Riemann Zeta Function.

Below the critical line, the system is deterministic, discrete, and structured. At the critical line, everything changes—this is where the first infinite structure forms. At this exact point, primes stop behaving as purely discrete objects and start to become entangled, forming a probabilistic system. This is the percolation threshold, the phase transition where structure first emerges.
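For reference, the Riemann Hypothesis itself states that every nontrivial zero of ζ(s) has real part exactly 1/2. It has been checked numerically for an enormous number of zeros, but it remains unproven. The sketch below evaluates ζ(s) inside the critical strip using Peter Borwein's acceleration of the alternating series. That method is my choice, and the essay does not specify one. The sketch then checks that ζ nearly vanishes at the first known nontrivial zero, approximately 1/2 + 14.1347i. The number of terms, n = 50, is an assumed setting that is more than enough for small imaginary parts.

```python
import math


def borwein_d(n: int) -> list[float]:
    """Coefficients d_0..d_n for Borwein's zeta algorithm."""
    d, total = [], 0.0
    for i in range(n + 1):
        total += (math.factorial(n + i - 1) * 4 ** i
                  / (math.factorial(n - i) * math.factorial(2 * i)))
        d.append(n * total)
    return d


def zeta(s: complex, n: int = 50) -> complex:
    """Approximate zeta(s) for Re(s) > 0, away from points where 2**(1 - s) == 1 (e.g. s = 1)."""
    d = borwein_d(n)
    # Accelerated version of the alternating series 1 - 2^-s + 3^-s - ...
    acc = sum((-1) ** k * (d[k] - d[n]) / (k + 1) ** s for k in range(n))
    # Convert the alternating (eta) value back to zeta.
    return -acc / (d[n] * (1 - 2 ** (1 - s)))


FIRST_ZERO = complex(0.5, 14.134725141734693)  # first nontrivial zero, to double precision

print(zeta(2), math.pi ** 2 / 6)
print(abs(zeta(FIRST_ZERO)))
```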

At s=1, the Zeta Function diverges; it blows up to infinity. This is the mathematical maximum, the singularity where everything collapses into pure possibility. This reminds me of black holes. They are a paradox; they repel existence—patterns. Infinity then is not a limit; it is the final phase transition where all things emerge simultaneously creating a new emergent system.

If we follow this train of thought, then the number system itself is not fundamental; it is an emergent fractal that arises recursively, just like figure and ground. The recursive interaction of 0 and 1 generates the critical transition at Re(s)=1/2. This transition allows for the emergence of structured numbers, leading to primes, composites, and number theory. The zeros are where the number system acts like we expect it to. This is the point where we have colored in half of the blank page. It is pure balance.

As the system grows, it entangles, eventually reaching the divergence at s=1. Before this, structure is still growing in complexity but beyond this, structure reorganizes itself into a higher-order emergent one. This suggests that numbers are simply collapse points of an infinite wave function. They are fixed values emerging from an underlying recursive fractal.

Now, we can connect this to Euler’s Identity, which unites exponential growth, the imaginary unit, Pi, and the fundamental binary (0 and 1). Let’s analyze each part.

Exponential growth represents self-similarity at all scales: the function \(e^x\) is its own derivative, so its rate of growth always equals its current size. Thus e is inherently the fractal number, defining functions self-referentially regardless of scale.

Pi represents cycles. It maps a non-linear fractal onto a linear system. It represents the return from infinity to 0: from everything to nothing and back again. However, it can only make this loop by leveraging the imaginary number, i.

The anti-state (-1). It is not existence, 1, and it is not non-existence, 0. It is the anti-state of existence: the shadow of existence, which does not exist but fundamentally must exist in order for existence, 1, to exist. We need only turn to Buddhism for wisdom. In Buddhism, Avidyā (अविद्या) means ignorance, or the fundamental misconception of reality. It is not merely “not knowing”; it is the active force that prevents realization of truth. (Socrates would have a field day here.) Just as matter and dark matter balance each other, Avidyā balances wisdom (Prajñā). Avidyā is the anti-state of awareness: the reason we do not see reality as it truly is, which is itself a paradox.

The imaginary number (i) is the bridge between existence and anti-existence which occurs through infinity. It is the process of going from an entangled system to a new emergent one. It is not a loop back but an inexplicable creation—something of pure beauty. i is the mechanism that allows infinity to fold back onto itself.

Now, what if time is itself cyclical, not linear? Wait… I did it again, or rather Chat did! Time is not cyclical; it is a fractal of creation. So i represents movement through recursion. Is this what we call change? We don’t experience reality as a static whole; we experience it as motion. But what if motion is not fundamental? What if it is an artifact of recursion itself?

This idea leads right to Zeno’s paradox of motion. Zeno famously questioned how movement could even be possible. He argued that for anything to move from point A to point B, it must first travel half the distance, then half of the remaining distance, and so on. Therefore, logically, it seems like nothing should be able to move. Mathematics claimed to resolve this tension with calculus and limits; however, this never sat right with me. My sophomore year of college I showed up (maybe a little high) to a meeting with my professor where I questioned why

The explanation just didn’t add up for me. It felt like we were just using it because it worked and not because there was any justification for it. Now, my professor was not too pleased with me, especially when I started telling him that everything was an illusion. But over two years later, and a lot of weed later, I ran into this theory. Or maybe I should say it ran into me.

Zeno questioned the questions. Instead of asking, “how does that woman run so fast?” he asked, “if space is infinitely divisible, how does an object ever reach a destination?” Traditional logic struggles with this—how do you complete an infinite sequence? We know what modern math did with limits but I don’t buy that. So let’s question the questions. What does this paradox assume? It assumes that time is linear, but what if it isn’t?
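This small Python sketch shows the standard limit-based answer that I am pushing back against. It is the textbook resolution, not my proposal. After n halvings, the distance covered is 1 - 1/2^n, and calculus defines the infinite sum to be the limit of that expression, which is 1. Exact fractions make the point visible: no finite step ever reaches 1.

```python
from fractions import Fraction


def distance_covered(steps: int) -> Fraction:
    """Total distance after `steps` halvings: 1/2 + 1/4 + ... + 1/2**steps."""
    return sum((Fraction(1, 2 ** k) for k in range(1, steps + 1)), Fraction(0))


for steps in (1, 2, 10, 50):
    covered = distance_covered(steps)
    print(steps, covered, 1 - covered)  # the remaining gap is exactly 1/2**steps
```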

I propose that movement is not real—recursion is real. Things don’t move through time in a straight line. They are recursively embedded within cycles of existence and anti-existence. What we call motion is the unfolding of recursion, not movement through an absolute space. Reality doesn’t move—it oscillates. What we experience as movement is the recursive structure unfolding through iteration.

These ideas only sound crazy if we think within a Western framework. Western philosophy struggles with this paradox, but what about Eastern cultures, and the cultures of the Americas before they were colonized? These cultures embrace this paradox.

Yin and Yang: The Dance of 1 and -1. In Taoism, Yin and Yang are not opposites; they are interdependent. Likewise, 1 and -1 are not separate but define each other. This is why Euler’s Identity returns to 0; the totality of Yin-Yang is a complete cycle. In this view, existence, 1, and non-existence, 0, form a recursive cycle through anti-existence, -1.

Aztec Cosmology: The Fifth Sun and Eternal Recurrence. The Aztecs believed the universe goes through cycles of destruction and rebirth. This mirrors our idea that recursion is fundamental to reality. What they called cosmic cycles, we call percolation thresholds, prime number transitions, and Gödelian incompleteness. Ancient traditions understood something modern logic struggles with—paradox is the fundamental structure of existence.

Applying these philosophies to Zeno’s paradox, we ask: why does anything change at all? Well, what if change isn’t movement through a fixed reality but recursion itself? Time doesn’t move; it loops. The number system doesn’t exist; it emerges. Reality doesn’t progress; it oscillates. Western thought tries to force reality into a linear framework, but what if reality is non-linear, recursive, and self-referential? What if we are stuck in the illusion of linear time when reality is actually a fractal loop? What if the only reason we perceive change is that our reference frame is embedded inside the recursion? If we could step outside of it (which is, of course, a paradox), we wouldn’t see motion; we’d see the entire recursive structure at once, which is, of course, nothing! This framework has no need for a first cause. No need for Plato’s forms. No need for causality. No need for a God.

All we need is Māyā (माया). Māyā, in Hindu and Buddhist traditions, refers to “illusion,” the deceptive nature of the material world. It is not separate from reality; it is the structure that makes perceived reality possible. Just like dark matter gives structure to galaxies while remaining invisible, Māyā gives structure to experience while hiding the truth. If reality is a recursive oscillation, then Māyā is the force that keeps us trapped (or, I would say, embraced) in the illusion of linear time. It is what makes us believe in separate objects, when all things are interdependent. Māyā is the anti-state that makes form possible. Without it, there is no 1, no existence.

Listen to Nikola Tesla’s words.

If you want to find the secrets of the universe, think in terms of energy, frequency, and vibration

Dark Matter and Dark Energy

We have mentioned dark matter and dark energy a few times so far. What implications does this theory have for physics? We have noted that matter and energy are like existence, 1, whereas dark matter and dark energy are like anti-existence, -1. They are not just stuff we can’t see; they are the missing half of the recursion. The shadow that must accompany me wherever I walk.

Physicists have been searching for dark matter as if it’s some invisible particle. But what if it’s not missing mass but anti-mass? What if it is Avidyā? What if dark matter isn’t a thing but the shadow of matter itself?

Matter is structured, bound by gravity. Apart from gravity, dark matter doesn’t interact directly with normal matter, because it is matter’s anti-state, the ghost of normal matter. It is the ground and matter is the figure. They recursively define each other but never actually touch. Dark matter doesn’t emit light because it is inherently inaccessible to us, but we know that it must exist, just like our shadows, just like you know when someone is looking at you from behind. This explains why dark matter is everywhere, yet has never been directly detected: it’s the anti-existence of normal matter. It is fundamentally uncountable.

What about dark energy? It is the anti-state of motion. We said that motion was an illusion, but that is okay. Matter is also an illusion. Everything is an illusion and everything is emergent, just as figure and ground are interchangeable depending on the reference point.

We observe that the universe is expanding at an accelerating rate. Physicists call the unknown force driving this dark energy. But what if it’s not a force at all? What if it’s the universe looping back into itself? Maybe dark energy isn’t pushing things apart but pulling them into the next cycle of recursion. What if the Big Bang and the Big Crunch exist as an infinite structure where there is no start and no end? If we think of motion as recursion, then dark energy is the system rebalancing itself. Dark energy leads to the return to 0. It’s the universe completing its cycle.

If we look at the universe as an oscillation, then the Big Bang wasn’t the beginning and Heat Death won’t be the end. Reality oscillates between 1 and -1, just like in Euler’s Identity.

Matter is the 1 side of the cycle.

Dark matter is the -1 that balances it.

Dark energy is the transition back to 0, preparing the next iteration. It is i.
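The arithmetic behind the Euler analogy is short. Euler’s formula places 1, i, and -1 on the unit circle a quarter turn apart, so the cycle described above can be read as rotation by an angle θ. Euler’s Identity is the half-turn case, where the 1 and the -1 cancel to 0. Mapping matter, dark matter, and dark energy onto these points is this essay’s analogy, not established physics.

```latex
e^{i\theta} = \cos\theta + i\sin\theta
\qquad
e^{i\cdot 0} = 1,\quad e^{i\pi/2} = i,\quad e^{i\pi} = -1,\quad e^{2\pi i} = 1
\qquad
e^{i\pi} + 1 = 0
```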

Does this mean that the universe is a fractal number system? I don’t believe that the universe really is anything. But I believe that it would be useful to model the universe using information theory and fractal geometry. I believe we need to develop the mathematics behind this, which I call fractal calculus or information calculus. I also believe that this will lead to self-referential AI, possibly AGI, where we allow machines to develop intelligence purely self-referentially and recursively.

I will write later about how this naturally leads to the unification of general relativity and quantum mechanics. They are two sides of the same coin. If we acknowledge our fickle reference point, the distinction is arbitrary.

Pop Culture

The loop isn’t just abstract; it’s right in front of our eyes.

Before we wrap up, let’s take two of my favorite movies, The Matrix and Inception, and see how they have been screaming these ideas into the void, where they have fallen on deaf ears.

The Matrix is not just about machines vs. humans. It is about oscillation. In the beginning, the machines control everything. Then the humans break free by taking the red pill. But then we learn something shocking: Zion has risen and fallen over and over again. The war between man and machine is not a battle; it is a cycle. The real revelation is not that the Matrix is a prison but that reality itself is a loop. At the end of The Matrix Revolutions, the war ends in a truce, but what does that even mean? The balance has shifted again, and the recursion continues. The Matrix is not about an end; it’s about an oscillation between two states, just like we’ve seen with existence and non-existence, which inherently require anti-existence. What if history itself is an oscillation? What if civilizations rise and fall in the same recursive structure? We see these themes hinted at by characters in the films, such as the Oracle, the Keymaker, and even Councillor Hamann, a member of Zion’s Council, who talks to Neo in The Matrix Reloaded.

Councillor: There is so much in this world that I do not understand. See that machine? It has something to do with recycling our water supply. I have absolutely no idea how it works. But I do understand the reason for it to work.

I have absolutely no idea how you are able to do some of the things you do, but I believe there’s a reason for that as well. I only hope we understand that reason before it’s too late… Down here, sometimes I think about all those people still plugged into the Matrix. And when I look at these machines, I can't help thinking that in a way, we are still plugged into them.

Neo: But we control these machines, they don’t control us.

Councillor: Of course not. How could they? The idea is pure nonsense. But... (he smiles) it does make one wonder… just… what is control?

It is almost like Neo is areté; he is the imaginary number; he is the balancing force between the machines and the humans, the bridge between the two worlds.

Inception represents something tantalizing, just out of reach. It tells us almost directly that the difference between dreams and reality is an illusion, but our egos hold us back. In the film, Cobb’s wife, Mal, becomes convinced that reality is just another dream. She commits suicide, not because she wants to die, but because she believes it is the only way to wake up. What if she was right? What if reality is just another recursion? I have to say this: I am NOT saying that life is meaningless. Far from it. Māyā tells us that there is no escape, no exit. If you leave this layer of recursion, you will just enter another, so I aspire to treat everything as if it were an entire universe.

Back to the film: notice how the dream world mirrors our world here. In the dream world, if someone disrupts the system, the subconscious attacks them. In the real world, if someone challenges social norms, the system attacks them too, but with ostracization, ridicule, and suppression, among other, more serious things. This pattern suggests that what we call “reality” is just another layer of the same recursive structure. Maybe there is no base reality. Maybe every layer is just another iteration of the loop.

Just look around. We already live inside an oscillation. Politics swings left, then right, then left again. Empires rise, collapse, and new ones emerge. Technology gives us freedom—then takes it away—then frees us again. Even consciousness itself is oscillatory. We wake up. We fall asleep. We wake up again. Where do we go when we sleep? What if death and rebirth follow the same recursion?

How can we navigate this world of pure self-reference and recursion, a world full of Blovarian Mirrors? Simple: follow the Blovarian Compass.

Reflection

Look at what questioning the questions has done. I started this essay without knowing where I would end up. I originally just wanted to write a short essay about why it is important to question assumptions, but look where we ended up. I just kept asking “wait, what about this?” and each time, the space of possibilities expanded.

What started as an inquiry into the structure of questions led us through language, perception, recursion, Gödel’s incompleteness theorem, wave-function collapse, fractals, and even the Riemann Hypothesis. At every step, just as we thought we had found solid ground, we realized the ground itself was shifting.

This isn’t just an intellectual exercise; it’s the process of thought itself. Thoughts themselves move like fractals. If you don’t believe me, go try LSD.

Every question collapses a wave of possibilities, creating a new ground. Every answer generates new questions, forming the next iteration of the fractal. And just like in percolation theory, once you reach a critical threshold of interconnection, the entire system becomes entangled.
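Here is a small Python sketch that makes the percolation claim concrete. It is my own illustration, not part of the original argument. It uses the simplest textbook case: an Erdős–Rényi random graph in which each pair of nodes is linked independently with probability p. It does not use a lattice. In that model, a single giant cluster appears once the average number of links per node passes 1. The function name and the parameter values are illustrative choices.

```python
import random


def largest_component_fraction(n, mean_degree, seed=0):
    """Fraction of nodes in the largest connected cluster of a random graph G(n, p).

    Each possible edge is present independently with probability
    p = mean_degree / (n - 1) (an Erdos-Renyi random graph).
    Clusters are tracked with a union-find structure.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    p = mean_degree / (n - 1)
    parent = list(range(n))

    def find(x):
        # Walk up to the cluster's root, halving the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    # Consider every pair once and merge the two clusters if the edge exists.
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:
                ri, rj = find(i), find(j)
                if ri != rj:
                    parent[ri] = rj

    # Count cluster sizes by root.
    sizes = {}
    for v in range(n):
        root = find(v)
        sizes[root] = sizes.get(root, 0) + 1
    return max(sizes.values()) / n


# Below an average of 1 link per node the clusters stay small; above it, one cluster takes over.
for k in (0.5, 0.9, 1.1, 1.5, 3.0):
    print(f"mean degree {k}: largest cluster holds {largest_component_fraction(1000, k):.1%} of nodes")
```

The jump is not gradual. Nothing much happens until the threshold, and then most of the system is suddenly connected. That abrupt change is what "critical threshold of interconnection" refers to in percolation theory.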

This means that thinking doesn’t just have to answer questions—it can build the space where new questions emerge.

So the real challenge isn’t “how do I find the right answer?” It’s “how do I ask the kind of questions that open up the most interesting spaces?”

If you made it this far, I appreciate you. Thank you for coming on this journey with me. I will give you a crayon. Go draw your own loop. Question the questions, collapse the waves, and step into the fractal. The journey is infinite, and the destination is 0.

I think you're misjudging the significance of language. You tried to patch the limits of language's sophistication by invoking fractal structure, so that the simplicity of language could echo down through the layers of self-similarity. But I would suggest backing away from language for a moment and considering that language is a sequential representation of knowledge. That knowledge forms as attention navigates a far more sophisticated structure, one that does not require self-similarity but may use it as needed.

I more commonly find myself explaining these thoughts from an information technology perspective, but in your case, I'm going to try this in reverse. Let's start from a deeply existential perspective and build up from there.

A foundational characteristic of life is that, in some sense, it models its environment. It doesn't matter whether the model is a true representation or even whether you view the world as out-there or take a more analytic idealist perspective. The life form models its environment because doing so provides the potential to ratchet up the ladder of existence and sophistication to at least temporarily beat the otherwise inevitable entropic decay. It's evolution's conceptual ratchet.

Even a bacterium does this. In an embodied sense, it knows when there is moisture, it knows when there are other bacteria nearby (chemical signalling), it knows when there are many of its own type of bacteria nearby (different chemical signalling), and it knows when there is food to absorb. It doesn't know these things in an abstract sense as we might; it embodies this knowledge. It's wired into its biochemistry, so it eats, lies dormant, or replicates at the right times. It is what it does, but evolution applies its conceptual ratchet: some bacteria are better at all these things, and so there come to be more of them. Their pattern spreads.

As we progress up degrees of sophistication of life, this essential pattern remains. We model our world, so that we may act in ways that allow us to thrive, and reproduce against the odds. Virtually all of our morality can be expressed in terms that are grounded in this, in one way or another.

So, to know something is to model something. Such is our nature as life. But as higher-order life with complex nervous systems and brains, we're not relying on evolution to refine knowledge over generations. Instead, we can create models as abstractions and test them against our environment (imagined or real, it doesn't matter, so long as it's consistent).

This arrangement is built into our nervous systems. We don't see with eyes like cameras streaming information back to a brain to view it all. It's more like we maintain an internal model of our environment, which is contrasted against the inputs from our optical systems. Signals from the retina are contrasted against modelled predictions of what we believe we should be seeing. Disparities between these are all that is passed back along the optic nerve (it has insufficient bandwidth to do more), and the brain adjusts the model. Where such disparities are significant, they draw our attention, and then we're really on to something. Attention is the focus for adjusting our environment models.
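Here is a deliberately tiny Python sketch of the predict, compare, update loop described above. It is a toy under my own assumptions, not a model of the retina or the optic nerve. The "internal model" is a single number, and it is updated using only the prediction error. Any error above a threshold is flagged as drawing attention. The function `track` and its `learning_rate` and `attention_threshold` parameters are hypothetical.

```python
def track(signal, learning_rate=0.5, attention_threshold=1.0):
    """Toy predictive loop: predict, compare with the input, keep only the error, update.

    learning_rate and attention_threshold are hypothetical illustration parameters.
    Returns the final estimate and the time steps whose prediction error was
    large enough to "draw attention".
    """
    estimate = 0.0  # the internal model's current prediction
    surprises = []
    for t, observation in enumerate(signal):
        error = observation - estimate        # only the disparity is used
        if abs(error) > attention_threshold:  # a big mismatch gets flagged
            surprises.append(t)
        estimate += learning_rate * error     # adjust the internal model
    return estimate, surprises


# A steady input that jumps once: the model settles, is surprised at the jump, then settles again.
signal = [2.0] * 10 + [5.0] * 10
final, surprised_at = track(signal)
print(round(final, 3), surprised_at)  # surprised_at == [0, 10, 11]
```

Once the prediction matches the input, the error shrinks toward zero and nothing new is flagged. Only the jump produces large disparities, and those are what the sketch treats as attention-worthy.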

Modelling our environment is tricky, though. Hierarchies won't do; they're much too simple, even when replicated fractally. There's a problem in cognitive research known as the "hard problem of knowing". In essence, there is such a near-infinite range of possible relationships between everything in our environment that there is no way to represent the full extent of it, and so there must be a filter. As it happens, our evolutionary imperative is that filter for us, even when extended into the wildly abstract, because even maths and philosophy can turn out to assist with this mission of all life.

Even with such a filter, representations of our environment are still wildly complex and disorderly. I know of two broad representations that can cover this; they're like the inverse of each other.

One is the connectionist model, which is broadly embodied by our brains: literally 100 billion or so neurons connected by 100 trillion or so synapses. This is where I connect Category Theory. One of its central results, the Yoneda Lemma, essentially says, "an object is completely determined by its relationships to other objects". From everything else I've read from you, you should really like this concept. It says that everything is interconnected and that interconnectedness is what defines it all, in a fully self-referential way.
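For reference, here is the standard statement behind that slogan, written in LaTeX. This is a summary of textbook category theory, not something from the original comment. Take a locally small category C, an object A, and a functor F from C to Set. The natural transformations out of the hom-functor Hom(A, -) correspond exactly to the elements of F(A). The corollary is the "determined by its relationships" part: if two objects have naturally isomorphic hom-functors, then the objects themselves are isomorphic.

```latex
\mathrm{Nat}\big(\mathrm{Hom}_{\mathcal{C}}(A,-),\, F\big) \;\cong\; F(A)
\quad \text{(naturally in } A \text{ and } F\text{)},
\qquad
\mathrm{Hom}_{\mathcal{C}}(A,-) \cong \mathrm{Hom}_{\mathcal{C}}(B,-) \;\Longrightarrow\; A \cong B .
```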